🕰️ A Brief History of Natural Language Processing

Part 1 - Natural Language Processing Series

This blog post was originally featured on the Cape AI Medium page.

This is the first blog post in a series focusing on the wonderful world of Natural Language Processing (NLP)! In this post I’ll present you with the lay of the land — describing seven important milestones which have been achieved in the NLP field over the last 20 years. This is largely inspired by Sebastian Ruder’s talk at the 2018 Deep Learning Indaba which I attended in Stellenbosch.

Short disclaimer before I begin: this post is heavily skewed towards neural network-based advancements. Many of these milestones, however, build on influential ideas from non-neural work of the same era, which I have omitted for brevity.

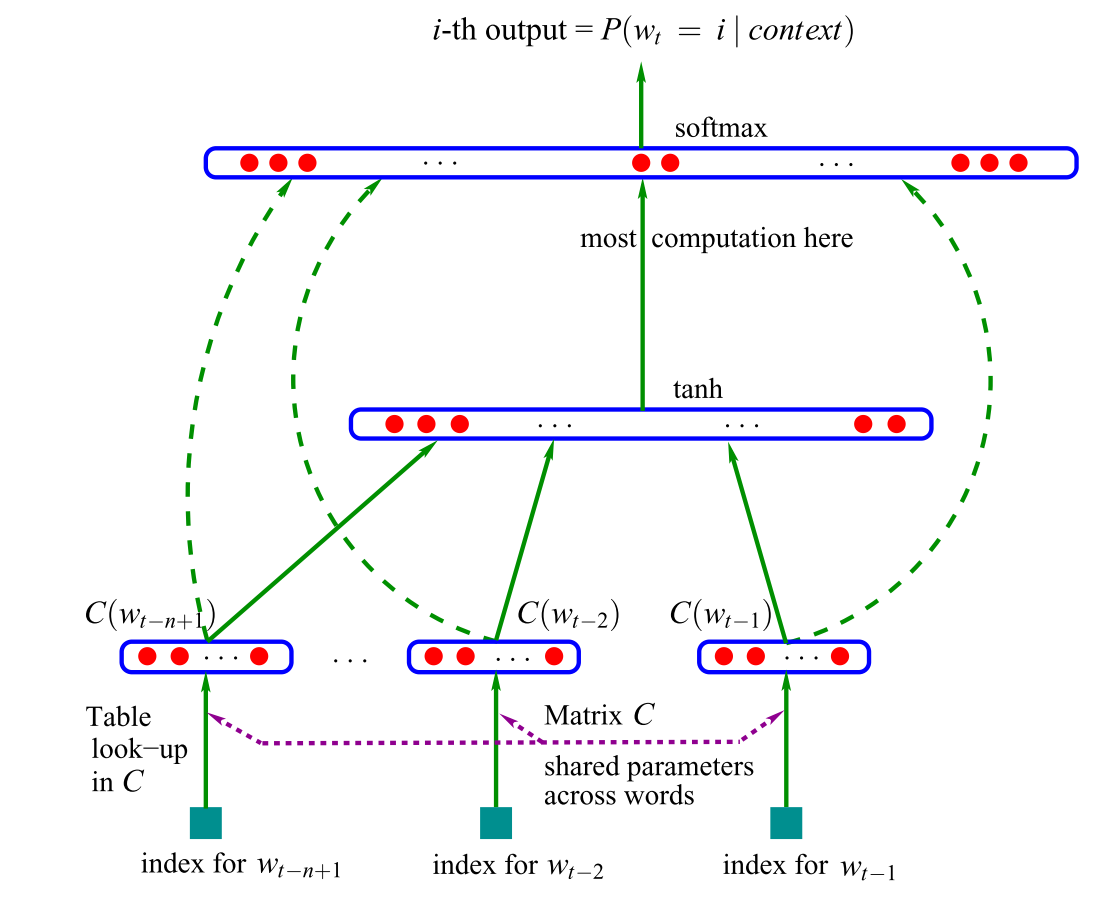

1. Neural Language Models (2001)

It’s 2001 and the field of NLP is quite nascent. Academics all around the world are beginning to think more about how language could be modelled. After a lot of research, neural language models are born. Language modelling is simply the task of determining the probability of the next word (often referred to as a token) occurring in a piece of text, given all the previous words. Traditional approaches to this problem were based on n-gram models combined with some sort of smoothing technique.
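To make the traditional approach concrete, here is a minimal sketch of a bigram model with add-one (Laplace) smoothing. The toy corpus and the function names `train_bigram` and `next_word_prob` are my own illustrative choices, and add-one is only one of many smoothing techniques, so treat this as a sketch rather than a reference implementation.

```python
from collections import Counter

def train_bigram(tokens):
    """Count bigrams and the contexts (previous words) they start from."""
    context_counts = Counter(tokens[:-1])             # how often each word acts as a context
    bigram_counts = Counter(zip(tokens, tokens[1:]))  # how often each (prev, word) pair occurs
    vocab = set(tokens)
    return context_counts, bigram_counts, vocab

def next_word_prob(word, prev, model):
    """P(word | prev) with add-one smoothing, so unseen pairs get a small non-zero probability."""
    context_counts, bigram_counts, vocab = model
    return (bigram_counts[(prev, word)] + 1) / (context_counts[prev] + len(vocab))

# Toy corpus, purely illustrative.
corpus = "the cat sat on the mat the cat ate".split()
model = train_bigram(corpus)

p_seen = next_word_prob("cat", "the", model)    # "the cat" appears in the corpus
p_unseen = next_word_prob("ate", "the", model)  # "the ate" never appears
print(p_seen, p_unseen)
```

Without smoothing, the unseen pair would get a probability of exactly zero, which is what makes smoothing necessary for n-gram models in practice.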

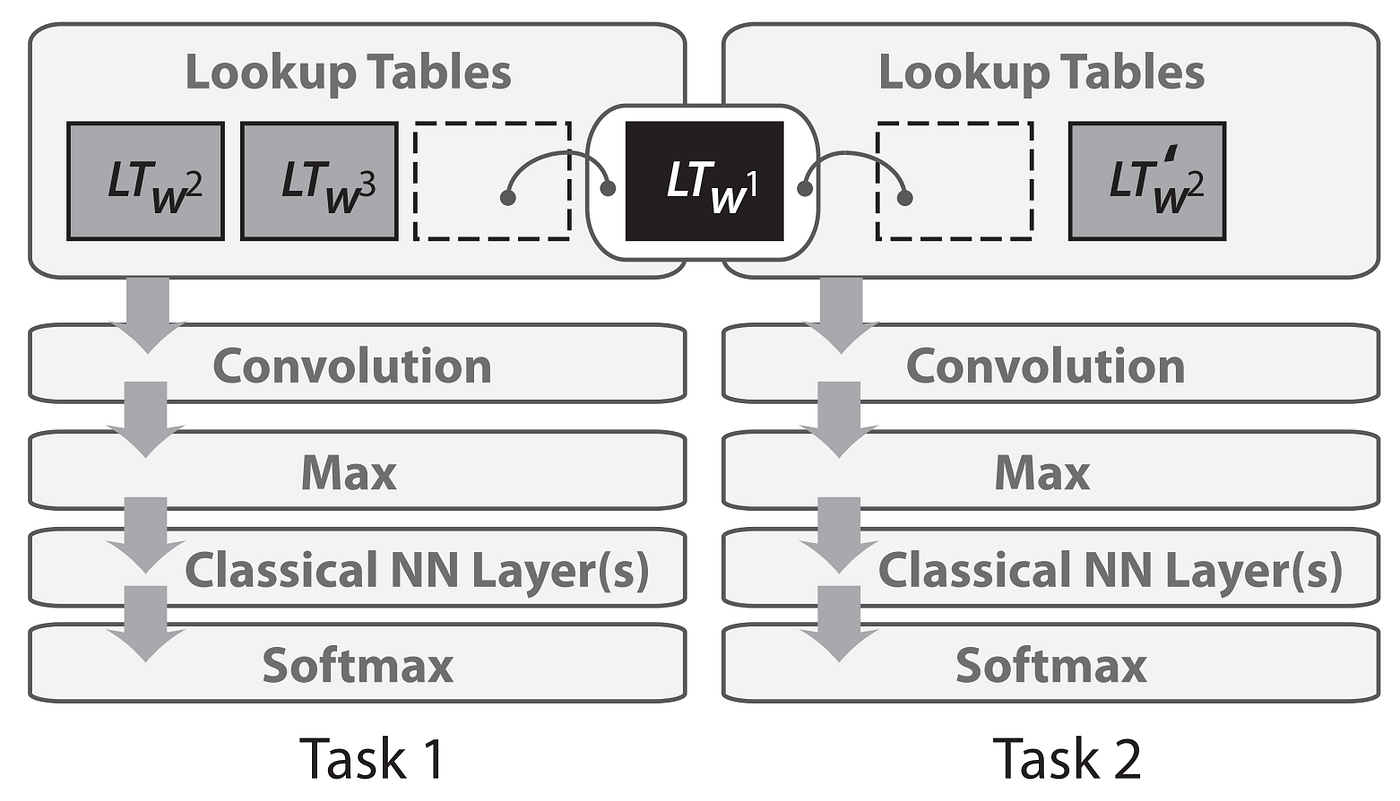

2. Multi-task Learning (2008)

Excitement and interest grow steadily in the years following the arrival of neural language models. Advances in computer hardware allow researchers to push the boundaries of language modelling, giving rise to new NLP methods. One such method is multi-task learning, which involves training a model to solve more than one learning task using a set of shared parameters. As a result, the model is forced to learn a representation that exploits the commonalities and differences across all tasks.
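The idea of shared parameters can be sketched with a deliberately tiny model: one shared weight feeds two task-specific heads, and both task losses push gradients into the shared weight. Everything here (the two toy regression tasks, the names `train_multitask`, `shared_w`, `head_a` and `head_b`, the learning rate) is an assumption made for illustration and is not taken from any particular paper.

```python
def train_multitask(steps=500, lr=0.01):
    """Hard parameter sharing: shared_w is updated by the gradients of both tasks."""
    shared_w, head_a, head_b = 0.5, 0.5, 0.5
    # Toy data: task A wants y = 2x, task B wants y = -3x.
    data = [(x, 2.0 * x, -3.0 * x) for x in (-1.0, -0.5, 0.5, 1.0)]
    losses = []
    for _ in range(steps):
        grad_w = grad_a = grad_b = 0.0
        loss = 0.0
        for x, y_a, y_b in data:
            hidden = shared_w * x          # shared representation
            err_a = head_a * hidden - y_a  # task A prediction error
            err_b = head_b * hidden - y_b  # task B prediction error
            loss += err_a ** 2 + err_b ** 2
            grad_a += 2 * err_a * hidden
            grad_b += 2 * err_b * hidden
            # The shared weight receives gradient from both tasks.
            grad_w += 2 * err_a * head_a * x + 2 * err_b * head_b * x
        shared_w -= lr * grad_w
        head_a -= lr * grad_a
        head_b -= lr * grad_b
        losses.append(loss)
    return (shared_w, head_a, head_b), losses

params, losses = train_multitask()
print(losses[0], losses[-1])
```

In a real system the shared part would be something like an embedding layer or an encoder, and the heads would be full task-specific output layers, but the gradient flow follows the same pattern.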

Collobert and Weston

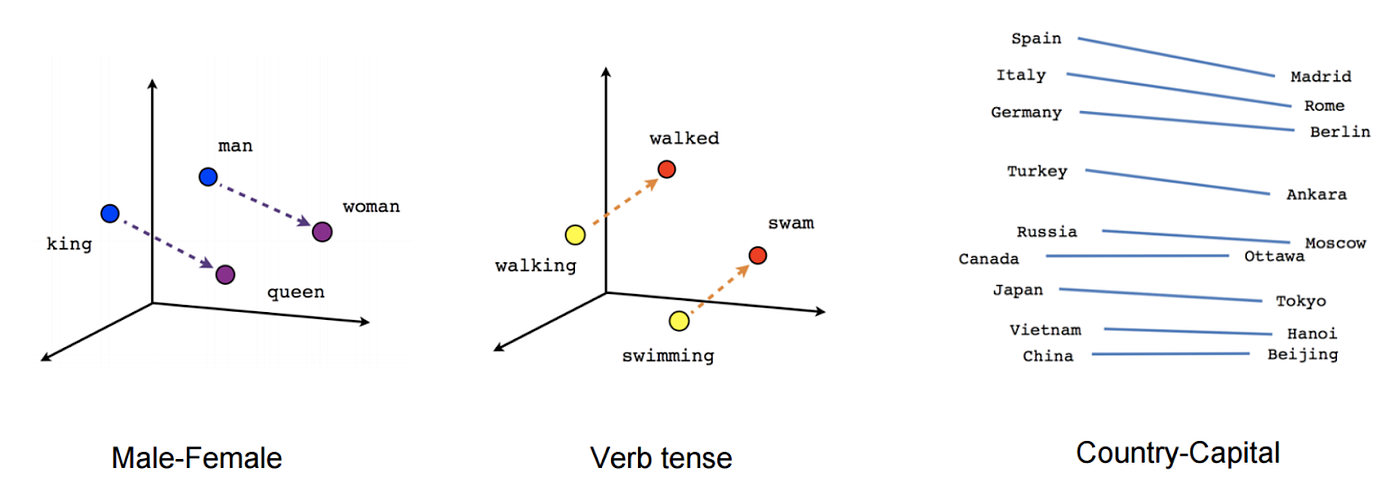

3. Word Embeddings (2013)

If you’ve had any exposure to NLP, one of the first ideas you have probably come across is word embeddings (most commonly through word2vec). Although we have seen that word embeddings have been used as far back as 2001, in 2013 Mikolov et al.

Word embeddings attempt to create a dense vector representation of text, and address many of the challenges of traditional sparse bag-of-words representations. They were shown to capture intuitive relationships between words, such as gender, verb tense and country-capital pairs, as illustrated in Figure 3.
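The kind of relationship described above is usually demonstrated with vector arithmetic. The sketch below uses hand-made three-dimensional vectors, not learned embeddings, so the numbers are assumptions chosen purely to illustrate the mechanics; the helper names `cosine` and `analogy` are hypothetical too.

```python
import math

# Hand-crafted toy vectors (NOT learned embeddings); the dimensions loosely
# stand for "royalty", "male" and "female".
vectors = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
}

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c):
    """Return the word whose vector is closest to vec(a) - vec(b) + vec(c)."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    # Exclude the query words themselves, as is common for this test.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

result = analogy("king", "man", "woman")
print(result)
```

With real embeddings the vectors have hundreds of dimensions and are learned from large corpora, but the lookup works the same way.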

4. Neural Networks for NLP (2013)

Looking back, 2013 appears to have been an inflection point in the NLP field, with research and development accelerating rapidly from then on. The advancements in word embeddings ultimately sparked the wider application of neural networks in NLP. The key architectural challenge was allowing sequences of variable length to be fed into a neural network, which ultimately led to three architectures emerging: recurrent neural networks (RNNs), which were soon replaced by long short-term memory (LSTM) networks; convolutional neural networks (CNNs); and recursive neural networks. Today, these architectures have produced exceptional results and are widely used in many NLP applications.

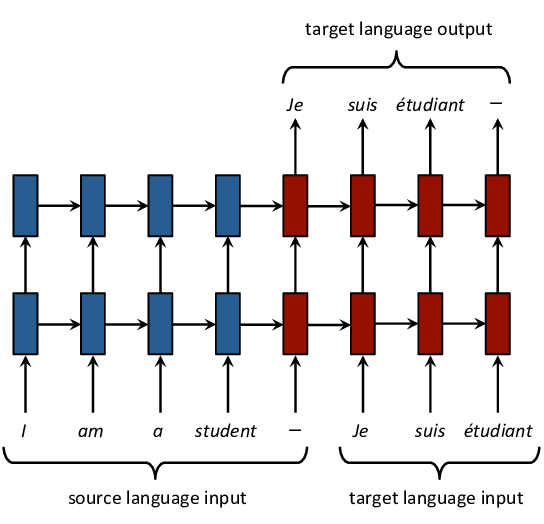

5. Sequence-to-Sequence Models (2014)

Soon after the emergence of RNNs and CNNs for language modelling, Sutskever et al.

This architecture is particularly useful in tasks such as machine translation (MT) and natural language generation (NLG). It’s no surprise that, in 2016, Google announced that it was in the process of replacing all of its statistics-based MT systems with neural MT models.

6. Attention Mechanisms (2015)

Although useful in a wide range of tasks, sequence-to-sequence models struggled to capture long-range dependencies between words in text. In 2015, the concept of attention was introduced by Bahdanau et al.

Attention is not restricted to the input sequence: it can also be used to focus on the surrounding words in a body of text (commonly referred to as self-attention) to obtain more contextual meaning. This is at the heart of the current state-of-the-art transformer architecture, proposed by Vaswani et al.
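As a rough illustration of the mechanism, the sketch below implements scaled dot-product attention, the scoring used in transformers, in plain Python. Note the assumptions: the original 2015 attention used an additive scoring function rather than a dot product, real models apply learned projections to produce queries, keys and values, and the vectors and function names here are made up for the example.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Weight each value by how well its key matches the query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

def self_attention(sequence):
    """Self-attention without learned projections: each position attends to all positions."""
    return [attention(x, sequence, sequence)[1] for x in sequence]

# Toy example: the query matches the first key much better than the second.
weights, context = attention([2.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [[10.0, 0.0], [0.0, 10.0]])
mixed = self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(weights, context)
```

Because every position can attend directly to every other position, the distance between two words no longer limits how easily information flows between them.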

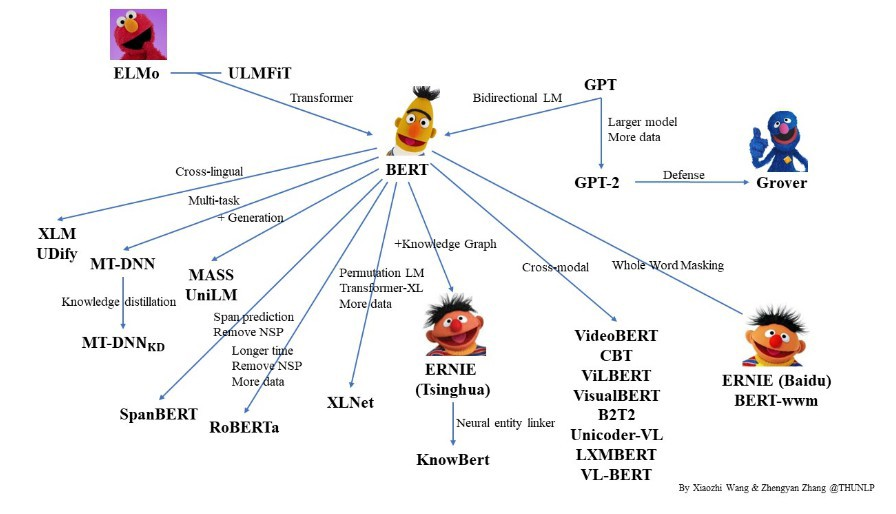

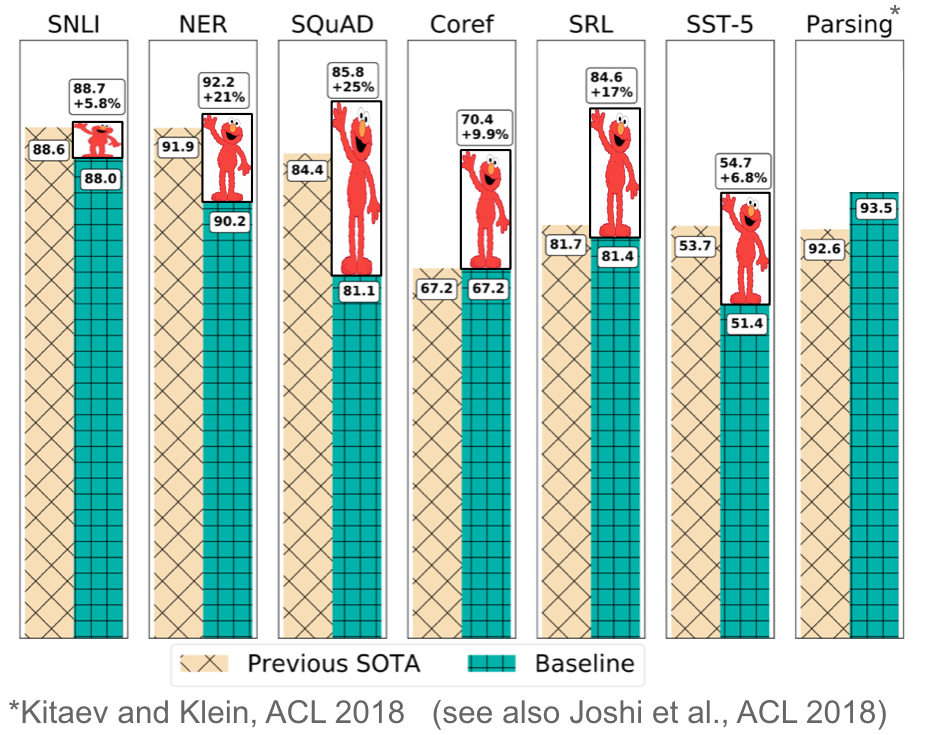

7. Pre-trained Language Models (2018)

Dai & Le

8. Where we are today and looking forward…

Nowadays, there exists an array of initiatives aimed at open-sourcing many large state-of-the-art pre-trained models. These models can be fine-tuned to perform various NLP-related tasks like sequence classification, extractive question answering, named entity recognition and text summarization (to name a few). NLP is advancing at an incredible pace and is giving rise to global communities dedicated to solving the world’s most important problems through language understanding.

Stay tuned to this series to learn more about the awesome world of NLP as I share more on the latest developments, code implementations and thought-provoking perspectives on NLP’s impact on the way we interact with the world. It’s an extremely exciting time for anyone to get into the world of NLP!